Pharma needs to get out of its comfort zone with AI and digital health

Patient odysseys, pilotitis and vendor clutter were the defining descriptors at the Digital Health and AI Innovation Summit (DHAI) in Boston, summarising the state of AI and digital in Pharma.

This is a monthly newsletter of Faces of Digital Health - a podcast that explores the diversity of healthcare systems and healthcare innovation worldwide. Interviews with policymakers, entrepreneurs, and clinicians provide the listeners with insights into market specifics, go-to-market strategies, barriers to success, characteristics of different healthcare systems, challenges of healthcare systems, and access to healthcare. Find out more on the website, tune in on Spotify or iTunes.

Let’s start with a glossary:

Vendor clutter - When vendors sell solutions they have, or think the market needs, instead of solving real problems.

Pilotitis - Doing pilot projects that don’t translate to everyday practice.

Patient odyssey - Many patients don’t experience a typical patient journey; instead, they go through an odyssey of navigating complex care systems.

As a chronic patient, I feel immense gratitude for the innovations in drug development. I’m alive thanks to Pharma and it is amazing to see how far we’ve come in gene therapies, biologics, drug repurposing to address more than one need, or “simple” things such as oral laxative formulas that you need to take before your colonoscopy (trust me, there's a big difference between having to drink several litres of what tasted like salt water the day before the procedure 20 years ago, and drinking a relatively palatable solution the evening before colonoscopy in 2024 …). I’m cautiously optimistic about the rise of platforms for in-silico (AI-driven) drug discovery.

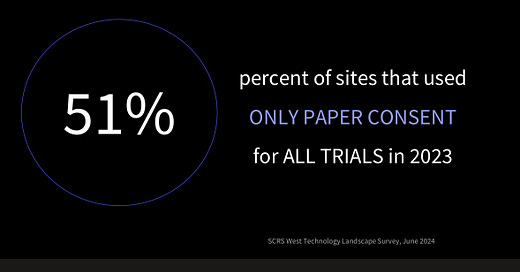

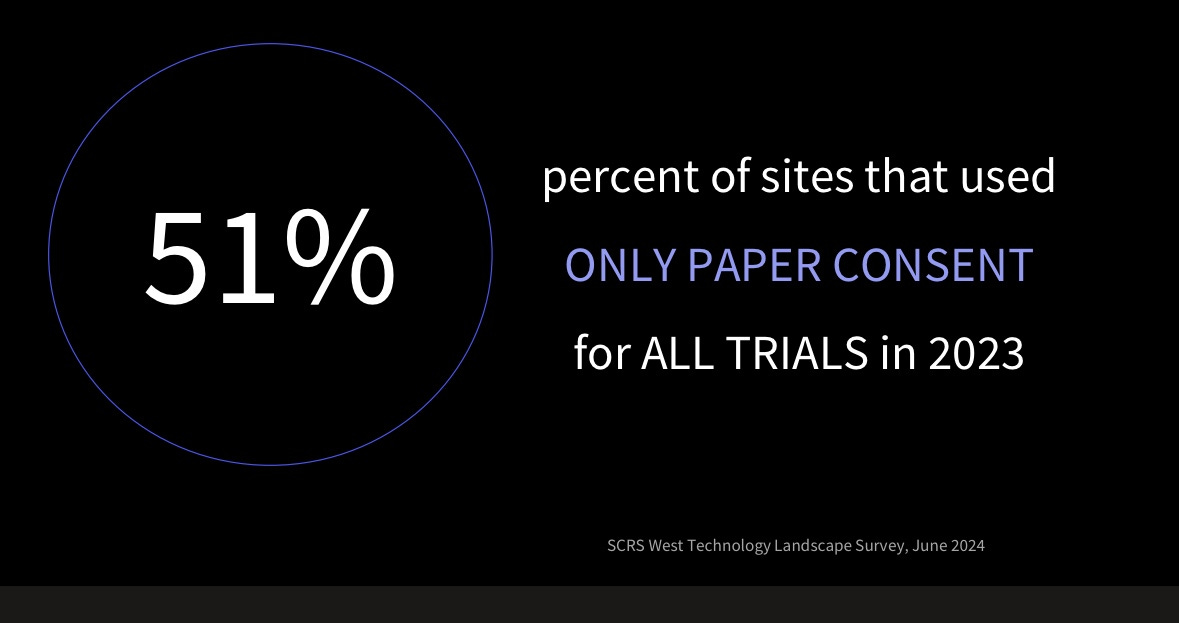

But beneath this promising progress, Pharma struggles to fully embrace digitalization. As Craig Lipset, a former Pharma executive and leader in clinical research and medicine development, mentioned at DHAI, even the simplest digital solutions, such as eConsent (digitized consent forms for clinical trials), were used in only 49% of clinical trials in 2023 (see his slides with sources below).

Despite regulatory guidance and patient preferences for eConsent, its use has declined since the pandemic. Digital data enables better compliance monitoring, yet Pharma isn't fully capitalizing on it.

Craig Lipset believes regulation is often used as an excuse for delaying innovation. Many digital solutions are already feasible within regulatory frameworks—what’s missing is bold leadership.

One company showing just that might be Otsuka Pharmaceuticals. Otsuka is notable for two reasons: first, it invested in Proteus, a digital health unicorn that developed a sensor to track adherence to schizophrenia medications. In 2017, this led to the first FDA-approved digital pill, which sparked debate about its long-term success, particularly for this indication.

To the surprise of many, in early 2020, Otsuka pulled the plug on financing Proteus, forcing the company to file for bankruptcy. Now, in 2024, Otsuka has launched a digital therapeutic (DTx) for major depressive disorder. According to the company, the DTx comes with a low co-payment to enhance accessibility. Otsuka acknowledges that this therapy will initially incur financial losses, and we will have to wait to see how successful the support for this therapy turns out to be.

Moreover, Otsuka made another move worth keeping an eye on: the company partnered with Amalgam, and in October 2024 they released an app for caregivers of patients with Alzheimer’s disease. The app is free and available in the US market. More importantly, it addresses caregivers rather than patients, a market niche that is continuously overlooked in healthcare, even though we know that ‘when a patient gets sick, the whole family gets sick’.

How are wearables used for research and in clinical trials?

Pharma is utilizing digital tools in several ways to measure clinical endpoints. For example, wearables that continuously track gait can detect motor function decline faster than standard in-office tests, such as the 10-meter walk test (a widely used measure of gait speed in Parkinson’s disease). Real-life mobility data from smartphone apps can also identify fall risks, which could serve as an early warning and prompt fall prevention measures.

Real-world data (RWD) is often seen as the true test of clinical innovation, as it reflects less-controlled, everyday conditions. Health monitoring sensors embedded in rings, jewelry, watches, bands, smartphones, and IoT devices collect RWD passively, making it a potential goldmine for future discoveries. However, as one speaker at DHAI pointed out, RWD in research often isn’t used for the purpose it was gathered for. When patients monitor their own health, they might gather data inconsistently. RWD is messy and requires careful interpretation. For instance, motor function on the right wrist can’t be measured accurately if the wearable is on the left wrist.

From the user’s perspective, simply collecting data without meaningful feedback can induce anxiety more than it improves well-being (see the humorous take from Gary Monk below).

The lack of consumer insights is a well-recognized problem, and AI is stepping in to address it. For example, sleep tracking apps can now offer insights into sleep quality and readiness scores, while apps can nudge users to move when they’ve been sitting for too long. And now, generative AI is also playing a role.

It seems patients are making the most out of AI

Patients use generative AI in several ways: to interpret their medical results, to translate medical jargon, to prepare for medical checkups and more.

That said, most patients aren’t scientists, and they may not recognize when AI-generated responses are inaccurate. It’s essential to use AI cautiously, keeping data security in mind and consulting healthcare professionals before making any decisions. Patient advocates Grace Vinton and Grace Cordovano recommend these steps for safely using generative AI:

Start with Questions: Use AI tools to prepare for medical visits by asking them to generate lists of questions based on your symptoms or diagnosis. This can help you make the most of your time with healthcare professionals.

Refine Prompts: Developing good prompts is crucial for getting the most useful responses from AI. Be specific, and don’t hesitate to refine and adjust prompts to get better results. For example, if you are using AI to write to an insurer to appeal a denied claim, make sure to describe the situation and your goal (a convincing appeal) to the AI.

Check for Errors: AI can help patients review their medical records for potential errors or duplications, which can improve the accuracy of their care.

To add to that: keep in mind that AI has a specific writing style, and many people can now recognize when content is AI-generated. Use AI as a tool for information but always consult professionals (doctors, lawyers, etc.).

What to be mindful of when using AI:

Security and Data Privacy: Patients should be cautious about sharing personal health information with AI tools, especially with open platforms like ChatGPT. Avoid sharing detailed medical results unless you're sure about the platform’s privacy policies.

AI Limitations and Hallucinations: AI can sometimes produce incorrect information (known as hallucinations).

Confirmation Bias: Be mindful of relying too heavily on AI or the internet for self-diagnosis, as this can lead to confirmation bias. Always consult with a doctor to verify the information.

How are we getting along with AI regulation?

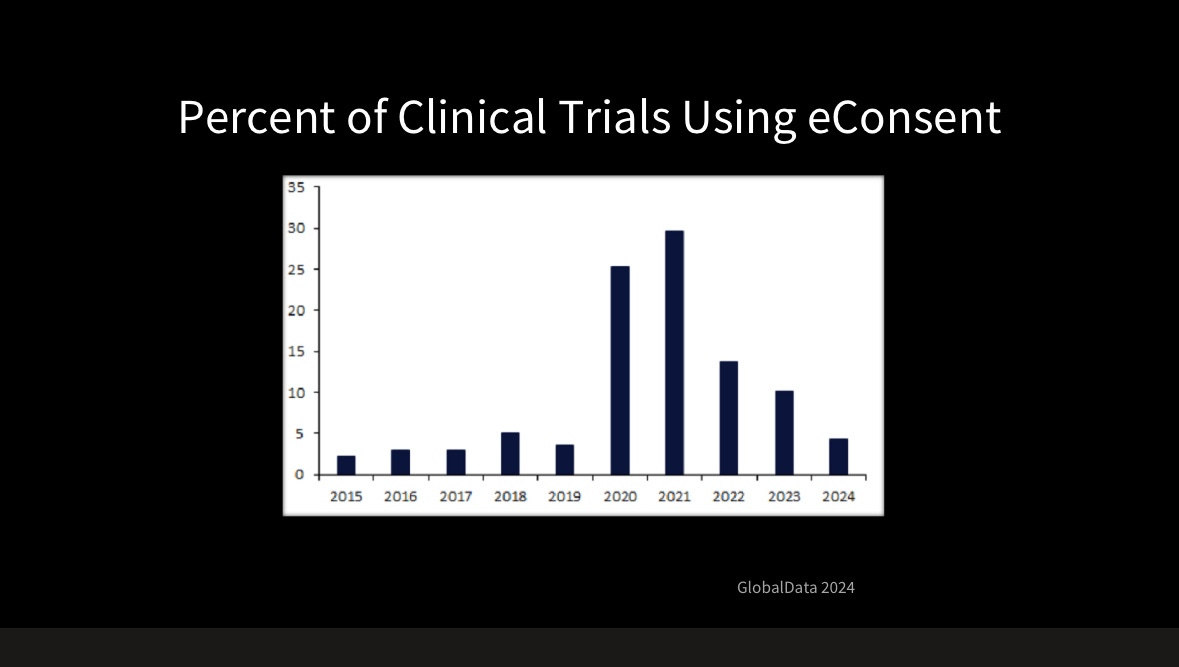

Ever heard the joke, “The good thing about data standards is that there are so many to choose from”? (Cue sarcasm.) The same issue is cropping up in AI regulation. In the U.S., there’s CHAI - the Coalition for Health AI, which will provide Assurance labs across the country to test and validate AI models:

The overview of AI assessment process in Assurance labs under CHAI. Source: CHAI

There’s TRAIN - the Trustworthy & Responsible AI Network, which was announced at HIMSS Global 2024 and aims to operationalize responsible AI principles to improve the quality, safety and trustworthiness of AI in health.

Working globally are Health AI - The Global Agency for Responsible AI in Healthcare, and the HIPPO AI Foundation, which fosters and advocates for open AI models in healthcare, and much more.

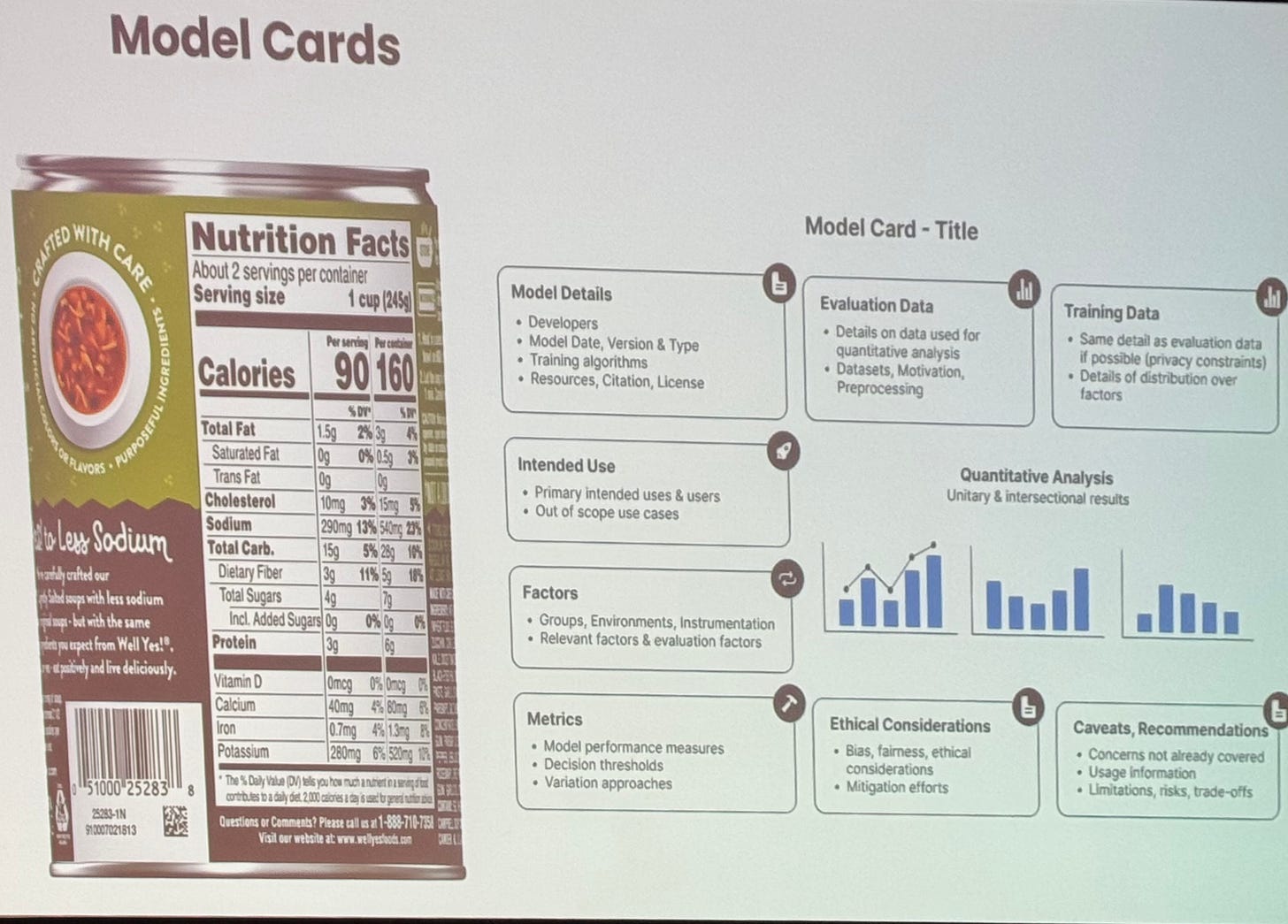

What’s universally agreed upon is that AI must be regulated, tested, and made transparent to some extent. As Brian Anderson, CEO of CHAI, remarked, AI should have “food labels” that inform users about model details, intended use, data used for training, ethical considerations, and more.

Slide by Brian Anderson, CEO of CHAI, at DHAI.

In conclusion, and shifting the focus back to Pharma: if eConsents are a challenge for Pharma, I guess we don’t have to worry about AI being adopted at scale in the industry anytime soon.

A longer interview with Brian Anderson about Assurance labs and CHAI will be published soon on the Faces of Digital Health podcast. Until then, tune into the episode about AI development globally with John Halamka (iTunes, Spotify).

See you in March?

San Diego will welcome medicine, healthtech and innovation trailblazers between 30 March and 2 April. The program will cover topics such as Exponential Technologies Update, AI & GPT Infused Health & BioMedicine, Hospital to Home, Catalyzing Healthspan, Where Technology meets Policy, Planetary Health, and much more.

Learn more on the NextMed Health website or watch last year’s recording on their YouTube channel.