How AI Changed Healthcare in 2023

Generative AI is reducing clinician burnout, and the shift towards value-based care is making healthcare ripe for AI interventions.

This is the monthly newsletter of Faces of Digital Health, a podcast that explores the diversity of healthcare systems and healthcare innovation worldwide. Listeners gain insights into market specifics, go-to-market strategies, barriers to success, the characteristics and challenges of different healthcare systems, and access to healthcare. Find out more on the website and LinkedIn page, or tune in on Spotify or iTunes.

Each year, the focus of excitement in digital health innovation shifts to a different area. In 2016/2017 it was mobile apps. I even recorded a documentary about the hopes and hype around digital health to figure out how big an impact we could expect from mobile health apps. Then it was AI. In 2017/2018, a plethora of blockchain startups popped up, promising transparency, tokens as compensation for sharing medical data, and greater security of healthcare data, among other things. When the pandemic struck, it seemed healthcare would forever shift from in-person consultations to a predominantly telemedicine-centric model.

In 2023, it seems that all we hear about is generative AI. However, if we look at the data and reports, several things become apparent.

We’re in the early stages of the use of generative AI in healthcare.

Let’s rewind a year.

The broader public became aware of the power of generative AI with the launch of ChatGPT in November 2022. Within a week of its release to the general public, ChatGPT had attracted 1 million users. About two months after launch it had 100 million users, and by November 2023 the number of users had reached 180 million.

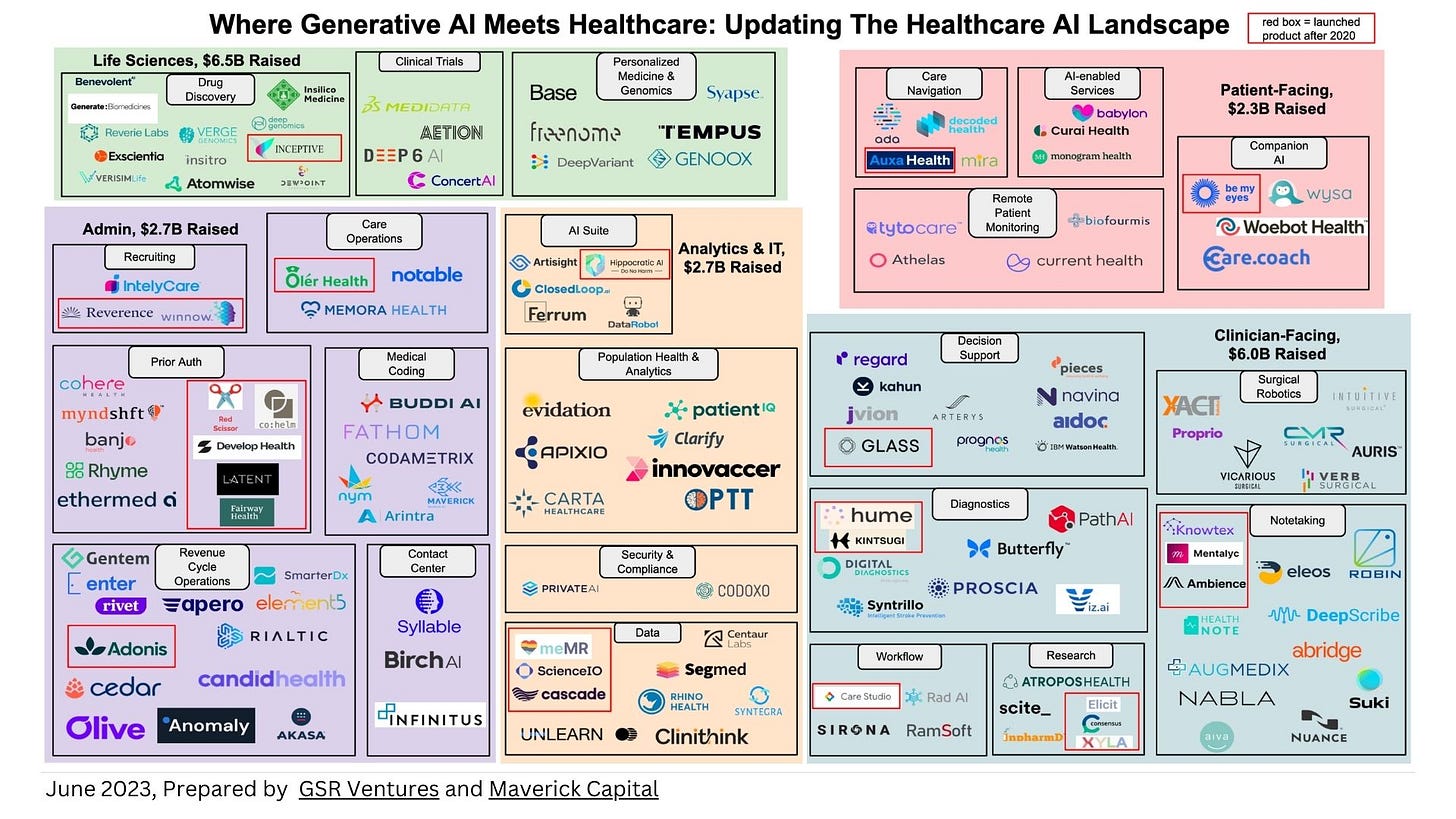

Many of the digital health companies have been using generative AI well before 2023. GSR Ventures and Maverick Capital prepared a nice overview of generative AI healthcare companies, putting those that launched their products after 2020 in red brackets:

In essence: many players have been here for a while.

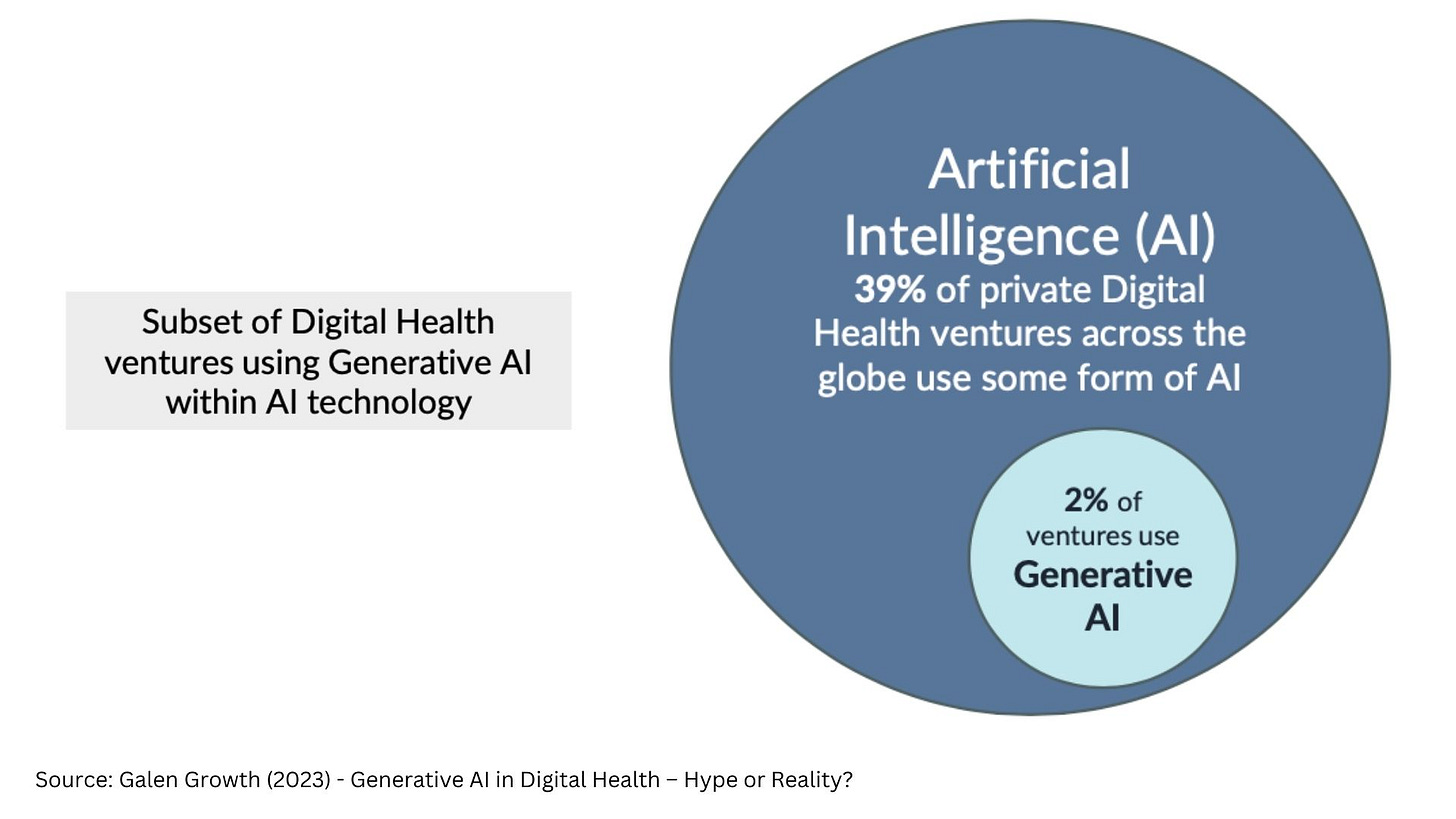

According to Galen Growth’s report on generative AI in healthcare, only 2% of digital health ventures that use AI also use generative AI. However, 72% of digital health startups working in biotech use generative AI.

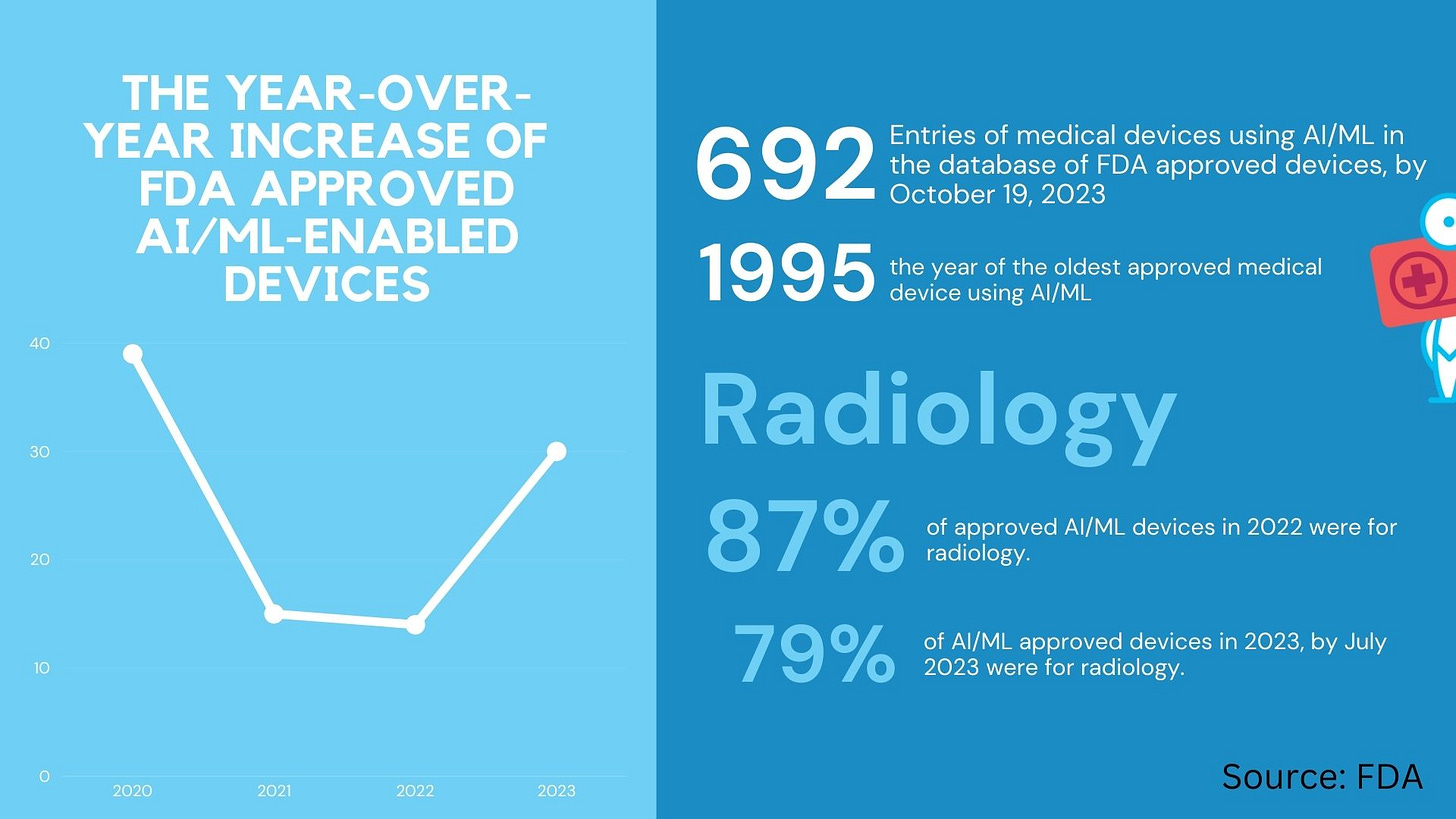

Beyond generative AI, “old” AI is on the rise, although the growth in new FDA-approved devices has had its ups and downs. The FDA’s list of AI/machine learning-enabled medical devices had almost 700 entries in October 2023. Most of the approved algorithms are used in radiology, and by most I don’t mean a bit more than 50%: radiology accounted for 87% of all AI/ML devices approved in 2022 and 79% of those approved in 2023. The year-over-year increase in FDA-approved AI/ML-enabled devices was 39% in 2020, fell to 14% and 15% in 2021 and 2022, and is projected to be 30% in 2023.

These algorithms generally aim to increase efficiency. For example, in June the company Ezra received 510(k) clearance for an algorithm that cuts a full body MRI scan from one hour to 30 minutes. (By the way, 510(k) clearance is not meant for completely novel devices; it applies to devices shown to be substantially equivalent to a device already legally on the market and placed in one of the FDA’s three classification categories.)

GenAI Brought Hope to Healthcare

Even though only 2% of digital health ventures use generative AI, 2023 was the year of generative AI in healthcare too. It brought hope that we are close to getting technology right in healthcare, instead of it causing EHR-related clinician burnout and PTSD from the overwhelming administrative work of collecting healthcare data. Doctors gave AI scribes a warm welcome. These generative AI-powered assistants can record patient visits and write and structure medical notes in real time, a major step forward in healthcare technology. At the moment, though, that’s about it as far as making doctors’ lives easier goes.

Beyond Generative AI: The Flashy and the Ugly Sides of AI in 2023

Among the flashiest news in AI-powered digital health innovation was the launch of Forward Health CarePods: self-sufficient pods deployed in malls, gyms, and offices in the US. For a $99 monthly membership fee, members can use the pods for a full body scan, heart health checks, thyroid testing, blood pressure measurement, weight management, diabetes screening, COVID-19 testing, HIV screening, and kidney and liver health checks. A doctor reviews the test results behind the scenes and can write a prescription if needed.

Among the Black Mirror-style AI revelations of 2023 was the news that UnitedHealth pushed its employees to adhere to the recommendations of a flawed algorithm, leading to numerous patients being wrongly denied rehabilitation care they needed. It was an example of one of the greatest fears about AI coming true.

AI algorithms are usually trained on historical data and can make errors when applied to new, real-world cases. A good step towards mitigating such errors is to use AI outputs only as guidance, so that a human with the proper expertise can still overrule an algorithmic recommendation. However, as reported by STAT, UnitedHealth employees were directed to keep patients’ rehab stays within 1% of the number of days projected by the algorithm. Technically speaking, the algorithm did what it was supposed to do: it “optimized” care, if we look at optimization through the lens of reducing insurance costs and increasing profit. Patients paid the price, and UnitedHealth now faces a class action lawsuit. This raises a natural question: how do we regulate AI in a way that protects patients without stifling innovation?
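To see how little room a 1% target leaves for clinical judgment, here is a small Python sketch of the arithmetic. It assumes the target is a symmetric band of plus or minus 1% around the projected stay; the 20-day projection and the helper names are hypothetical, chosen only for illustration, and do not describe any insurer’s actual system.

```python
def allowed_window(projected_days: float, tolerance: float = 0.01) -> tuple[float, float]:
    """Return the shortest and longest stay within +/- tolerance of the projection."""
    margin = projected_days * tolerance
    return projected_days - margin, projected_days + margin


def within_target(actual_days: float, projected_days: float, tolerance: float = 0.01) -> bool:
    """True if an actual stay falls inside the tolerance band around the projection."""
    return abs(actual_days - projected_days) <= projected_days * tolerance


# Hypothetical projection of a 20-day rehab stay (illustrative number only).
low, high = allowed_window(20)
print(f"Allowed stay: {low:.1f} to {high:.1f} days")  # Allowed stay: 19.8 to 20.2 days

# Extending the stay by a single day, e.g. on a clinician's judgment, misses the target.
print(within_target(21, 20))  # False
print(within_target(20, 20))  # True
```

For any projected stay shorter than 100 days, a 1% band is narrower than a single day, so in practice almost any deviation a clinician chose to make would fall outside the target.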

Regulation TL;DR 2023: work in progress

The pioneering piece of legislation, the EU AI Act, was proposed by the European Commission in April 2021. The premise is simple: sort AI applications into four risk categories. Applications posing an unacceptable risk, such as a social scoring system like China’s, are banned outright, while high-risk applications face the strictest requirements. But 2021 feels like a distant memory. With ChatGPT widely available, a whole new spectrum of questions arose about AI and how it could be regulated. Unlike traditional predictive machine learning models, generative AI produces new output with each prompt, which makes it very difficult to compare outputs and assess their accuracy. Hence, the right approach is still under discussion.

In the US, the National Institute of Standards and Technology (NIST) released its voluntary AI Risk Management Framework in January 2023, which can act as a guideline for the industry. Then, in October, President Biden signed the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. In healthcare, it requires the Secretary of Health and Human Services (HHS), in consultation with the Secretaries of Defense and Veterans Affairs, to establish an “HHS AI Task Force” by January 28, 2024. The task force will then have a year to develop a strategic plan, possibly including regulatory action, for the responsible use of predictive and generative AI-enabled technologies in health care.

We’ll check in in 2025.

What Do the Experts Say?

Now that you have the overview, here’s how industry experts commented on the current state of AI in healthcare.

In a live panel discussion recorded for the podcast in November, I spoke with:

Shweta Maniar, Global Director, Life Sciences Solutions and Strategy for Google Cloud,

Rachel Dunscombe, CEO of openEHR International Foundation,

Kira Radinsky, CEO and CTO of Diagnostic Robotics,

Harvey Castro, MD, MBA, Physician, Speaker, Strategic Advisor for ChatGPT & Healthcare.

You can watch the video recording:

Where Can We See AI in Practice?

… For Personal Use

Medical students are using ChatGPT for educational purposes: to create summaries or to turn text into MP3 files they can listen to on their commutes.

… For Client Management

Diagnostic Robotics uses generative AI to suggest to care managers how to respond to a specific patient when they are trying to persuade that patient, empathetically, to take preventive care measures. Rather than replacing people with bots, which many users still dislike, this gives the people talking to patients additional ideas for addressing each individual’s concerns.

… For Precision Medicine

Google's platform is being used by various organizations to create solutions for personalized medicine. For instance, Google introduced its Medical Imaging Suite, an AI-assisted diagnostic tool used by Hologic for cervical cancer diagnosis and by Hackensack Meridian Health for detecting metastasis in prostate cancer patients. In the pharmaceutical sector, Google has launched AI-powered solutions to accelerate drug discovery, including suites for early research, target identification, and multi-omics, integrating AI with other advanced technologies. Precision medicine takes into account individual variability in genetics, environment, and lifestyle to support personalized patient care, with the aim of improving diagnosis, prevention, and treatment plans. Shweta Maniar hopes these technologies will also contribute to greater equity in healthcare.

Promising Times For Value-Based Care

Healthcare systems globally are slowly maturing in digitalization. At the same time, worries about escalating healthcare expenses are mounting. Consequently, we have no choice but to find inventive strategies to preserve health and manage costs effectively. This is precisely where value-based care comes in, and where AI will play a crucial role, said Kira Radinsky, CEO and CTO of Diagnostic Robotics. “Value-based care is a move towards paying clinicians based on patient outcomes, in contrast to the fee-for-service model. In other words, if patients are healthy, you make more money. This requires a lot of AI.”

Primary care physicians have a few thousand assigned patients each, and it’s impossible to continuously monitor all of them. Once patients leave the doctor’s office, doctors are completely disconnected from what is happening with them. “Here comes AI. How can I allow you to identify patients who are deteriorating so you can start interventions before they become sick?” said Kira Radinsky. To build predictive population health models, Diagnostic Robotics obtained more than 60 billion historical claims and almost 100 million medical records to identify patterns of care management for different patients. “After 15 years of only documenting data, I think these are the first two years where we’ve started seeing a shift, where physicians will be incentivized if the patients are healthy and not only based on the documentation and services they provide. In other words, the environment is ripe for an AI intervention.”

Diagnostic Robotics is based in Israel and operates in the US, Israel, Brazil, and South Africa. While the company’s American healthcare provider customers still struggle with decentralized healthcare data and with combining patient data from different sources, countries such as Israel, and even South Africa, have centralized data centers with a lot of data and a lot of opportunities to build models on top of that data, Radinsky added.

The Data Question in Generative AI

Good data is the basis for good AI. However, the challenges with healthcare data are far from solved. Rachel Dunscombe emphasized that we still have a lot of work to do in unifying how we record data. “We've got a data legacy problem, a technical debt that we've built in where we have far too many data models. Blood pressure, for instance, in the UK is recorded in hundreds of different formats. So from my perspective, we need the foundations to be right. Part of this is not to rush, and to make sure we have the right data foundations,” she said.

Shweta Maniar echoed the need for caution, despite the general public’s excitement and even though doing AI right can be slow and time-consuming. “We need to be slow and methodical. You want to focus on keeping the human in the loop as you're developing this technology and doing proof of concepts,” she said.

ChatGPT, Bard, and Claude have become essential tools for many people, helping them draft messages, marketing ideas, strategies, and more. Developers use them to check their code. So what role do data standards play in a world where generative AI can structure data? For now, generative AI serves mainly as an assistant. “Generative AI has got a role in being part of the deciding, proposing, peer reviewing of data structures for the future. I think that is absolutely logical. It's just going to add power to us getting the data right,” said Rachel Dunscombe.

Regulation: Food Labels for AI

Among clinicians, impressions of generative AI are mixed. There are early adopters, and there are clinicians who say they will retire early if these technologies are introduced into their clinical practice, said Harvey Castro. Fear of AI stems from a lack of understanding and a lack of transparency about where and how AI is used. One potential solution could be to add labels, similar to food labels, to AI products. “When a patient comes to the doctor, they need to know that AI is being used and then vice versa. When the doctor goes to work, they need to know that the hospital picked the AI and which AI and what's going on. With labels, me as a doctor, I can know that this particular AI has this bias, has these issues, and that way we can address it,” said Harvey Castro, expressing hope that larger companies in the AI space will be cautious. Smaller players might not be. “I'm actually more worried about the one-off companies that may not really fully understand these bylaws and use some of these APIs to start creating products that really aren't safe or that aren't even regulated by the FDA where they should be regulated by the FDA,” he elaborated.

Rachel Dunscombe also sees food labels as a good way forward. In her view, we also need a comprehensive approach to integrating AI into healthcare, driven by user-centered design and informed by parallels in the analogue world. Who can one turn to if an AI seems to be off? “We need to know how do you report an issue? How do you challenge something? In a human place, you'd ask for the supervisor, the ward manager, whatever else. How do we get that equivalent?” she said, emphasizing that service design needs to consider everyone from the citizen to the clinician, because it’s not just about the AI; it’s about reimagining healthcare.

The Future is … Bright!

As 2023 draws to a close, what can we expect in the upcoming years?

Better interaction with the healthcare system

Shweta Maniar sees opportunities in healthcare optimization, with better information flow between patients and healthcare systems. “Humans want greater interaction with their health care system, but it doesn't necessarily mean they want increased human interaction,” she said.

Equity

Rachel Dunscombe hopes we will be able to curb healthcare spending and use large language models to improve equity: to make services multilingual and to make advice applicable to people in a specific household or context.

Precision medicine

In life sciences, Shweta Maniar expects further advancements in drug development. “We can have enhanced chatbot interactions for general health questions for first-line triage, but where I see things going is how this can support advanced research and development, to support how researchers are going to identify future therapy candidates using different simulations and aggregated population data and using LLMs to mine large trails of research data,” she said.

Speed and efficiency

Kira Radinsky is looking at the potential from the clinicians’ side, expecting new AI capabilities to help clinicians make faster but still accurate calls on patient care. “My biggest belief is specifically in the preventive care space where there's a lot of buildup of relationship and obtaining data and then summarizing it to a physician to make a quick call. The more those tools can help make this efficient, the bigger the implications are going to be on the burnout of physicians.”

Personalized health

Harvey Castro has high hopes for a shift towards preventive medicine, with personalized approaches at the individual level. He believes large language models will play a pivotal role in analyzing individual DNA profiles to identify specific health needs, which will lead to highly personalized medical treatment. This could result in a more targeted approach to medication where, instead of a standard dose, a person might need a significantly lower dosage of a medication, or perhaps none at all, thanks to proactive lifestyle adjustments. The overarching vision is a healthcare future focused on preventing illness before it starts.

We have reasons to be optimistic.