Natural Language Processing Is The Assistant Healthcare Has Been Waiting For

Tech solutions are getting better at symptom checking, turning voice notes into structured data, and automating processes while conversing with users in natural-sounding human language.

This is a monthly newsletter of Faces of Digital Health - a podcast that explores the diversity of healthcare systems and healthcare innovation worldwide. Interviews with policymakers, entrepreneurs, and clinicians provide the listeners with insights into market specifics, go-to-market strategies, barriers to success, characteristics of different healthcare systems, challenges of healthcare systems, and access to healthcare. Find out more on the website, tune in on Spotify or iTunes.

Imagining a world where a computer or a device would accurately diagnose your health-related issues just by scanning you is an old futuristic idea. We’re not there yet, and most decision support systems for navigating care or physician decision-making are equipped with disclaimers regarding their reliability.

Nonetheless, technology is progressing fast and we are witnessing increasingly impressive algorithms and AI. The latest one that has gained enormous public attention because of its accessibility is ChatGPT - a large language model that is able to generate human-like textual responses to a variety of user prompts.

Until ChatGPT, most chatbots were clunky, rigid, and very limited in their understanding of human language. ChatGPT, on the other hand, produces such well-crafted, human-like responses that a discussion with it is actually very pleasant.

Symptom Checking, Voice Recognition, Data Structuring, and Conversational AI

Well-known applications of chatbots in healthcare are symptom-checking solutions. These might or might not be based on conversational AI. In many cases, they aim to narrow down possible causes of health issues by taking the user through a case-specific, dynamic set of questions (below is an example from the Ada Health app, whose AI is based on a probabilistic reasoning engine).

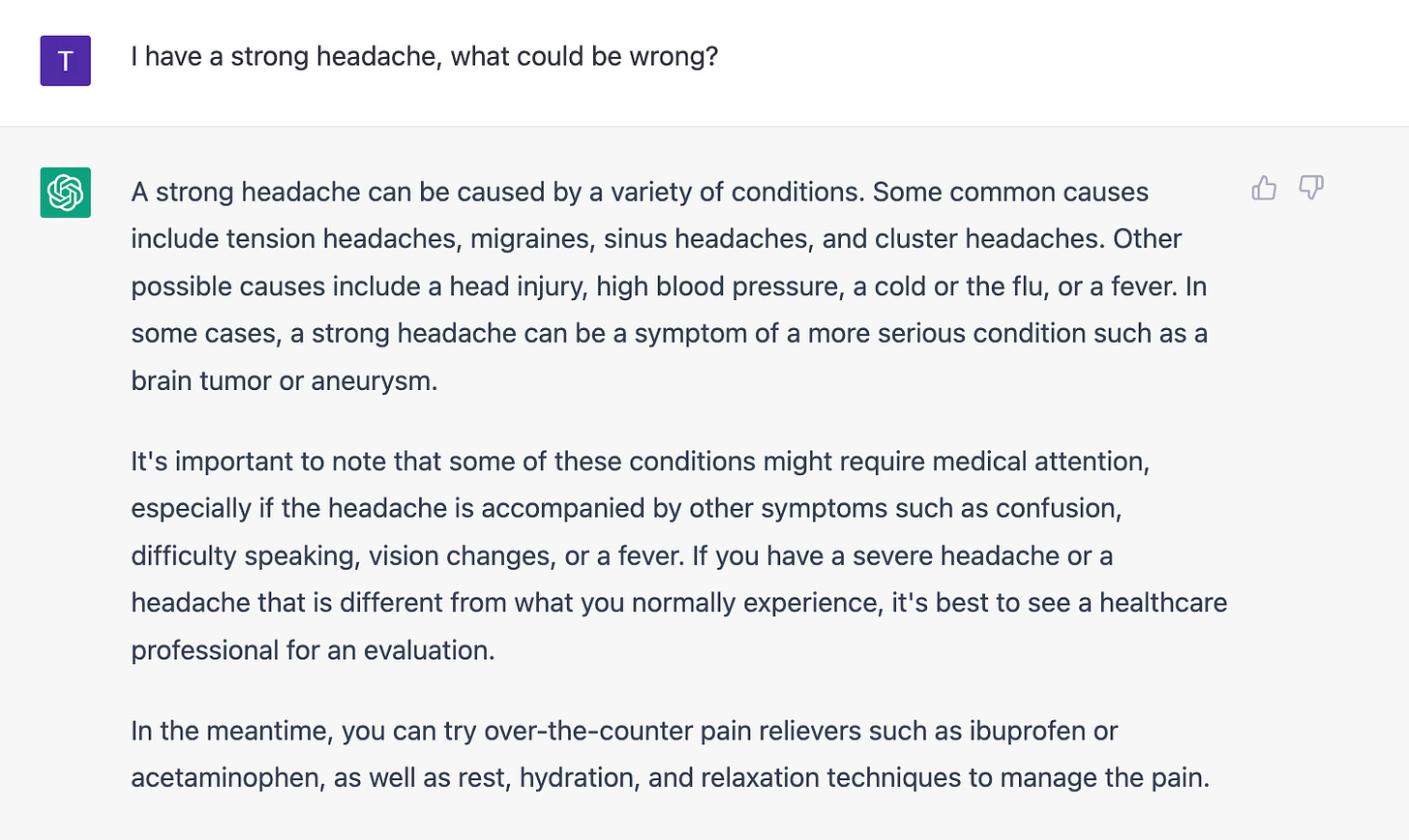
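To make the idea of a probabilistic reasoning engine more concrete, here is a minimal sketch of how a symptom checker could narrow down causes with yes/no questions. It is not Ada's engine: the conditions, the probabilities, and the function names `update` and `next_question` are all invented for illustration, and the question-picking rule (ask about the symptom whose answer is currently least predictable) is just one simple heuristic.

```python
# Hypothetical toy data: prior beliefs and P(symptom present | condition).
# The numbers are invented for illustration and are NOT clinical values.
PRIORS = {"common cold": 0.6, "influenza": 0.3, "migraine": 0.1}
LIKELIHOODS = {
    "fever":    {"common cold": 0.2, "influenza": 0.9, "migraine": 0.05},
    "headache": {"common cold": 0.3, "influenza": 0.6, "migraine": 0.95},
    "cough":    {"common cold": 0.8, "influenza": 0.7, "migraine": 0.05},
}


def update(beliefs, symptom, present):
    """Apply Bayes' rule for one yes/no answer and renormalize the beliefs."""
    posterior = {}
    for condition, prior in beliefs.items():
        p_yes = LIKELIHOODS[symptom][condition]
        posterior[condition] = prior * (p_yes if present else 1.0 - p_yes)
    total = sum(posterior.values())
    return {condition: value / total for condition, value in posterior.items()}


def next_question(beliefs, asked):
    """Pick the unasked symptom whose 'yes' probability is closest to 50%."""
    candidates = [s for s in LIKELIHOODS if s not in asked]
    if not candidates:
        return None

    def distance_from_even(symptom):
        p_yes = sum(beliefs[c] * LIKELIHOODS[symptom][c] for c in beliefs)
        return abs(p_yes - 0.5)

    return min(candidates, key=distance_from_even)


if __name__ == "__main__":
    beliefs, asked = dict(PRIORS), set()
    answers = {"fever": True, "headache": True, "cough": False}  # simulated user
    while (symptom := next_question(beliefs, asked)) is not None:
        beliefs = update(beliefs, symptom, answers[symptom])
        asked.add(symptom)
        print(symptom, {c: round(p, 2) for c, p in beliefs.items()})
```

Each answer reweights the list of candidate conditions, and the next question depends on the answers so far, which is what makes the question set dynamic and case-specific.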

This is quite different from a discussion with ChatGPT in two ways. First, with a symptom checker, the choice of responses is predefined: you choose among the offered answers. Second, the symptom checker asks follow-up questions to narrow down the possible causes of a problem, whereas ChatGPT only replies without asking anything back, so the user is the one who has to keep asking additional questions to get more information.

What does this mean for access to healthcare and diagnostics? The 2022 systematic review of symptom checkers published in Nature concludes that symptom checkers’ diagnostic and triage accuracy varied substantially and was generally low. Accuracy varies between symptom checkers and across the conditions being assessed, which raises safety and regulatory concerns.

“First and foremost, we don't believe that any technology is going to ultimately replace doctors. When you read a lot of these studies, what they don't go into is what happens when you make this data available to the clinicians themselves. What we found is that by putting the results of our assessment in clinicians’ hands, it helps them both become more accurate in their ultimate diagnosis, but also in considering certain conditions that they may not be as familiar with,” says Jeff Cutler, CCO of Ada Health - the world's most popular symptom assessment app, with 10 million users and 25 million completed assessments.

Symptom Checkers as the Modern Triage

Every three seconds, someone turns to Ada for personal health guidance. “We currently cover over 3,600 conditions, and that maps to over 31,000 ICD10 codes here in the United States. So just to put that in perspective, we believe that's at least more than twice as much as other symptom checkers. There are many popular symptom checkers out there that are really just designed to cover the top 20, 30, 50 conditions that people are experiencing,” says Jeff Cutler.

Ada now serves as the digital front door for Sutter Health and Kaiser Permanente in the US. Patients come to the doctor through an enterprise version of Ada: they complete an assessment, which is then mapped to the actual triage and care and included in the patient’s medical record.

Voice Technology as The New Frontier In Documentation Management

The amount of stored data in medical records is increasing and if paper medical records look like a mess, at least for patients with complex medical histories, electronic health records are often no different. Clinicians copy-paste their clinical assessment and are frustrated by the time-consuming process of note-taking, the lack of data transferability, the difficulty of searching for information, and more.

Many companies offer solutions for easier patient data management, presenting the right information at the right time, and helping doctors in their work. The heavily anticipated tool that would make many of the workflow steps easier is voice technology.

The promise of voice is great: imagine a doctor speaking to her patient while her words get correctly transcribed, interpreted, and recorded in a structured way in a clinical system. Thanks to Natural Language Processing (NLP)[1], voice-to-text transcription is becoming increasingly accurate and detailed.

Voice technology and voice recognition could be roughly divided into three categories, says Punit Singh Soni, CEO of Suki, a US-based company providing doctors with an AI voice assistant.

The past generation of voice technologies primarily offers speech recognition and enables transcription of voice.

New ambient technology involves human transcribers and doesn’t scale well.

Digital assistant technology uses AI to understand the intent of the speaker.

Smartphones and Laptops as the Only Needed Hardware For Voice to Text Capture

Some voice assistants require special hardware, such as specialized dictation tools and directional microphones. Directional microphones pick up sound primarily from one direction and reject sounds from other directions, which makes them useful for isolating a specific sound source, such as a person speaking in a noisy hospital environment. This can be an additional burden in terms of cost and the training users need to operate the equipment properly. To avoid this issue, Suki Assistant uses existing equipment a clinician might have - a smartphone or the laptop’s microphone.

When the clinician dictates a note, that note is automatically structured in the medical record with the help of Suki’s proprietary natural language processing model. It extracts data and structures it based on IMO terminology, which is recognized by all large EHR systems in the US. “Suki works across 30+ specialties. By using a different language model for [each] specialty, you eliminate errors that are cross-specialty,” says Punit Singh Soni.
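As a rough illustration of what "structuring" a dictated note means, the sketch below turns a transcript into a small record of problems and medications. It is a rule-based stand-in, not Suki's proprietary model: the vocabulary, the `TERM-…` codes (placeholders, not real IMO or ICD-10 identifiers), the dose pattern, and the function name `structure_note` are all assumptions made for this example.

```python
import re

# Hypothetical mini-vocabulary: spoken phrase -> (normalized term, placeholder code).
# The codes are made-up placeholders, not real terminology identifiers.
VOCABULARY = {
    "high blood pressure": ("Hypertension", "TERM-001"),
    "hypertension": ("Hypertension", "TERM-001"),
    "type 2 diabetes": ("Type 2 diabetes mellitus", "TERM-002"),
}

# Very naive pattern for "<drug> <amount> <unit>", e.g. "metformin 500 mg".
DOSE_PATTERN = re.compile(r"(?P<drug>[a-z]+) (?P<amount>\d+) ?(?P<unit>mg|mcg|ml)\b")


def structure_note(transcript):
    """Extract coded problems and medication doses from a free-text transcript."""
    text = transcript.lower()

    problems = []
    for phrase, (term, code) in VOCABULARY.items():
        entry = {"term": term, "code": code}
        found = re.search(r"\b" + re.escape(phrase) + r"\b", text)
        if found and entry not in problems:  # synonyms map to one entry
            problems.append(entry)

    medications = [
        {"drug": m["drug"], "dose": f'{m["amount"]} {m["unit"]}'}
        for m in DOSE_PATTERN.finditer(text)
    ]
    return {"problems": problems, "medications": medications}


if __name__ == "__main__":
    note = (
        "Patient has high blood pressure and type 2 diabetes. "
        "Continue lisinopril 10 mg daily and metformin 500mg twice a day."
    )
    print(structure_note(note))
```

A production system would replace the lookup table and regular expression with trained language models, but the output has the same shape: free text in, coded and searchable fields out.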

What’s Next After Transcribing And Data Structuring?

What we can’t do with voice today, but might be able to do in the future, is run search queries and deploy systems that detect the speaker’s intent and act accordingly, in contrast with the current command-and-control approaches, says Singh Soni. “There's a lot of skills that voice tech needs to acquire to make it an even better digital assistant. Ultimately you have to figure out how to get into patients’ lives. Today we are clinically focused, but one day, there's no reason why the patient when they're walking to the clinic, can't say, ‘here's why I'm coming. Here are the issues I have.’ The system can create an intake form, give it to the doctor, and the doctor then says, ‘give me a summary of the patient. What is it that they want?’ And then it helps them with that. The doctor uses voice too. All of this is coming.”

And Then There Were ChatGPT and MedPaLM

This brings us to Large Language Models (LLMs) - something most of the world is now aware of due to ChatGPT. When OpenAI released ChatGPT and made it available to the public in November 2022, nobody anticipated the revolution the LLM would cause. Researchers say the AI tool achieved over 50 per cent in one of the most difficult standardized tests around: the US medical licensing exam. In healthcare, clinicians in the US tested it for writing insurance payment claims that would justify a procedure they did on a patient. Two months after the release, the list of promises and hopes for use cases includes summarizing healthcare records to get a short overview of the patient’s medical history, proposing diagnoses, simplifying jargon-dense reports, etc.

However, caution is advised.

On 11 December, Sam Altman, the CEO and Founder of OpenAI, the organization that created ChatGPT, wrote a tweet in which he emphasized that ChatGPT should not be relied upon for important queries because it’s often factually inaccurate. It’s also a general model.

So what can and can’t ChatGPT be used for today?

How Do Large Language Models Work In The First Place?

Large language models are developed in several stages. First, the model learns from a very large number of inputs - information and documents. The model is then tested and fine-tuned “manually” through a human-supervised process; in the case of ChatGPT, this is reinforcement learning from human feedback (RLHF).

Less than a month after the release of ChatGPT, Google published a paper about its own Large Language Model, MedPaLM. Unlike ChatGPT, which was trained on a large number of internet documents to form a broad understanding of human language, MedPaLM is specifically designed for medicine.

In the case of MedPaLM, Google first had a broad language model called Flan-PaLM. They then applied this model to a database called MultiMedQA, which includes six existing open question-answering datasets spanning professional medical exams, research, consumer queries, and medical questions asked online. The final model is MedPaLM, which, according to the authors of the paper, performs encouragingly but remains inferior to clinicians.

The future of NLP rests in combinations of LLMs with smaller niche language models.

Before we continue: what does Large mean in the context of LLMs? “GPT-3 (Generative Pre-trained Transformer 3 language model, predecessor of ChatGPT) has 175 billion parameters. 60% of the training data comes from Common Crawl, a data set of 380 terabytes. This is about 10,000 times the size of Wikipedia. This is the scale we mean when we talk about large language models,” explains Alexandre Lebrun, CEO of Nabla.
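The figures in the quote can be turned into a quick back-of-the-envelope calculation. The sketch below uses only the numbers quoted above plus two assumptions that are not from the interview: each parameter is stored as a 16-bit float (2 bytes), and 1 terabyte is counted as 1,000 gigabytes.

```python
PARAMETERS = 175e9        # GPT-3 parameter count, as quoted above
COMMON_CRAWL_TB = 380     # Common Crawl size in terabytes, as quoted above
WIKIPEDIA_RATIO = 10_000  # "about 10,000 times the size of Wikipedia"

BYTES_PER_PARAM_FP16 = 2  # assumption: weights stored as 16-bit floats


def weights_size_gb(parameters, bytes_per_param):
    """Storage needed just to hold the model weights, in gigabytes."""
    return parameters * bytes_per_param / 1e9


def implied_wikipedia_gb(common_crawl_tb, ratio):
    """Wikipedia size implied by the quoted ratio, in gigabytes (1 TB = 1,000 GB)."""
    return common_crawl_tb * 1000 / ratio


if __name__ == "__main__":
    print(f"Weights alone: {weights_size_gb(PARAMETERS, BYTES_PER_PARAM_FP16):.0f} GB")
    print(f"Implied Wikipedia size: {implied_wikipedia_gb(COMMON_CRAWL_TB, WIKIPEDIA_RATIO):.0f} GB")
```

Under these assumptions, the weights alone take about 350 GB, far more than the memory of a typical single machine, and the quoted ratio implies a Wikipedia of roughly 38 GB.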

What Can We Expect From ChatGPT in Healthcare and How Will LLM-based Solutions Evolve in the Future?

In January, we invited Alexandre Lebrun, CEO of Nabla, and Israel Krush, CEO of Hyro - two companies specializing in AI in healthcare - to join a debate to clarify the state of natural language processing in healthcare.

Not all of it is necessarily good.

If a doctor uses AI to generate a reimbursement request, why wouldn’t the insurance agent use AI to write a compelling rejection? "It's going to be a whole new world. Governmental entities are starting to think about what is allowed, what is not allowed. As a society, we will need to create the right balances or at least how can we deal with fake news, fake entities, fake information that become much easier to generate," commented Israel Krush, CEO of Hyro.

Nabla is a French company that has created an AI-based medical assistant that makes healthcare professionals more efficient. For instance, it automates clinical documentation and patient engagement.

Hyro - mostly present in the US market - is the world's first headache-free conversational AI, especially focused on healthcare. It’s used for automation across call centers, mobile apps, websites, and SMS; use cases include physician search, scheduling, prescription refills, FAQs, and more.

Consequence 1: The Field of LLMs Will Evolve Very Rapidly, Thanks to ChatGPT

Alexandre Lebrun anticipates a new wave of entrants in the natural language processing space because ChatGPT is making the creation of a minimum viable product much easier. “Before large language models, if you wanted to test an idea, you could use machine learning models, but you still needed a minimal amount of data to train the first version. Now, if you have an idea of automating something, you can test it without any data with ChatGPT. It'll be wrong sometimes, but it'll be enough for, say, physicians to imagine themselves using your future products. You can find out if it's valuable and if it's worth investigating further.”

Israel Krush compares this potential to the invention of AWS. “Before AWS, you had to have a bunch of people that knew hardware and were able to build servers in specific rooms and so on and so forth, and all of a sudden, everything is in the cloud, and it's in the click of a button. ChatGPT is not the exact equivalent, but somehow similar in terms of how easy it is today to start an NLP company.”

Startups Can Move Fast by Combining LLMs With Their Smaller Case-Specific Language Models

It is nearly impossible for startups to build their own large language models. Still, they can build smaller models for fine-tuning and creation of their solutions, explains Alexandre Lebrun. “For us as a startup, it's out of the question to train a large language model. We don't have enough computer power. We are talking about tens of millions of dollars for each cycle of training. No normal startup can do that. We can, however, use a large language model and work on how we prompt it. And on the side, we can train our smaller language models, which are very specific to our task. There are different weapons we can use, but clearly, the game is different now than it was a year ago. I think the startups who are learning the fastest how to use these things will eventually win the new game,” says Alexandre Lebrun.

Here are a few additional questions the two experts answered in the debate. You can watch it on YouTube or listen on iTunes or Spotify.

What is the Most Difficult Thing for Companies Developing LLMs in Healthcare?

Alexandre Lebrun: I think in healthcare, the most difficult thing with machine learning models, in general, is that it is very slow and complicated and expensive to get data and also feedback from users. So, for instance, if I change my model to generate the clinical documentation after a consultation, it's very difficult to know if what we change works better or not because of all the data protection and privacy barriers. The feedback loop is extremely long and expensive because we need to talk to doctors one by one.

Israel Krush: On top of that, one of the big problems conversational AI companies face is preserving context and understanding context, which means being able to respond to context switches. Another thing, less problematic in text, more in voice, is latency and everything related to actually conducting the conversation with potential interruptions, meaning that if the user interrupts while the assistant is in the middle of a response, the model needs to respond appropriately. In voice, when you don't have a visual in front of you, the response has to be in real-time.

How Well Does ChatGPT Fare as a Translator of Medical Jargon to Plain Language Patients Can Understand?

Alexandre Lebrun: LLMs are really good at translations. For example, Google PaLM proved that with just five examples, their model is on par with highly specialized systems for translating English to German. It is so strong without specific training that we can assume that it should be really good at translating medical language into lay patient language because we could see them as two languages.
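The "five examples" approach described in the answer above is usually called few-shot prompting: the examples are placed directly in the prompt, and the model is asked to continue the pattern. The sketch below only builds such a prompt and does not call any model. The five example pairs, the `Clinical:` / `Plain:` labels, and the function name `build_few_shot_prompt` are illustrative assumptions, not a format required by ChatGPT or PaLM.

```python
# Five hypothetical jargon -> plain-language pairs, written for illustration.
EXAMPLES = [
    ("The patient is afebrile.", "The patient does not have a fever."),
    ("Patient presents with dyspnea on exertion.",
     "The patient gets short of breath during physical activity."),
    ("No evidence of acute fracture.", "There is no sign of a new broken bone."),
    ("The patient is hypertensive.", "The patient has high blood pressure."),
    ("Bilateral lower extremity edema noted.", "Both legs are swollen."),
]


def build_few_shot_prompt(examples, clinical_sentence):
    """Assemble an instruction, the worked examples, and the new sentence to rewrite."""
    lines = ["Rewrite each clinical sentence in plain language a patient can understand.", ""]
    for clinical, plain in examples:
        lines.append(f"Clinical: {clinical}")
        lines.append(f"Plain: {plain}")
        lines.append("")
    lines.append(f"Clinical: {clinical_sentence}")
    lines.append("Plain:")  # left open for the model to complete
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_few_shot_prompt(EXAMPLES, "Patient denies chest pain or palpitations."))
```

The resulting text would be sent to a language model as-is. Given the reliability concerns discussed in this newsletter, any output would still need review by a clinician before reaching a patient.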

How Reliable is ChatGPT?

Alexandre Lebrun: ChatGPT is not reliable at all. It makes mistakes, it hallucinates, and the danger is that the form is so confident, the language is so perfect that it takes concentration and detailed reading to detect inaccurate or false meanings or the wrong deep reasoning.

Could FDA Approve ChatGPT as a Medical Device?

Alexandre Lebrun: ChatGPT is everything the FDA is having nightmares about. It's not deterministic. We don't understand how or why it gives the output it does. It's impossible today to prove that ChatGPT will behave or not behave in a certain way in some situations. And it is very hard to prove that it won't suddenly advise a patient to kill himself, for instance. So I think ChatGPT alone will never pass FDA approval or any service approval.

Israel Krush: I don't see ChatGPT in its current form anywhere close to approval. Perhaps some smaller iterations focusing on niche cases that might also be coupled with other technologies, would increase the chances for an approval. But currently, as the name says, it’s a general model. I would also claim that it's like asking whether a specific physician would be FDA-approved regarding symptom checking. Ask three physicians about a complex situation, not an easy one, you'll probably get at least two different answers.

Special thanks for this newsletter go to ChatGPT, which helped with some explanations, and to Matic Bernik and Robert Tovornik, data scientists at Better, for their help in preparing for the debate with Alexandre Lebrun and Israel Krush.

Among Other Episodes in the Past Month

Healthcare in 2033: “You can only disrupt healthcare in a non-disruptive way” (Mark Coticchia, Baptist Health)

At the last mHealth Israel Conference in Tel Aviv, Mark Coticchia, who is the Innovation, Technology Commercialization, and Venture Development Leader at Baptist Health Innovations, shared his prediction about healthcare systems and healthcare delivery in the US in 2033.

Read the transcript or tune in:

[1] Natural Language Processing (NLP) is a field of artificial intelligence that involves developing algorithms and models that enable computers to understand, interpret, and generate human language. NLP examples include language translation, sentiment analysis, named entity recognition, text summarization, speech recognition, and text-to-speech.